Q&A RAG Quickstart


# What is RAG

RAG stands for Retrieval-Augmented Generation.

RAG is a technique for augmenting an LLM's knowledge with additional data.

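One of the most powerful applications of LLMs is the sophisticated question-answering (Q&A) chatbot. These applications answer questions about a specific body of source information, and they rely on a technique called Retrieval-Augmented Generation (RAG).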

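LLMs can reason about a wide range of topics, but their knowledge is limited to public data up to the point in time when they were trained. To build AI applications that can reason about private data, or about data introduced after a model's cutoff date, you need to augment the model's knowledge with the specific information it needs. The process of fetching the appropriate information and inserting it into the model's prompt is called Retrieval-Augmented Generation (RAG).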

# RAG architecture

A typical RAG application has two main components:

Indexing: a pipeline that ingests data from a source and indexes it. This usually happens offline.

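Retrieval and generation: the actual RAG chain. At run time it takes the user's query, retrieves the relevant data from the index, and passes that data to the model.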

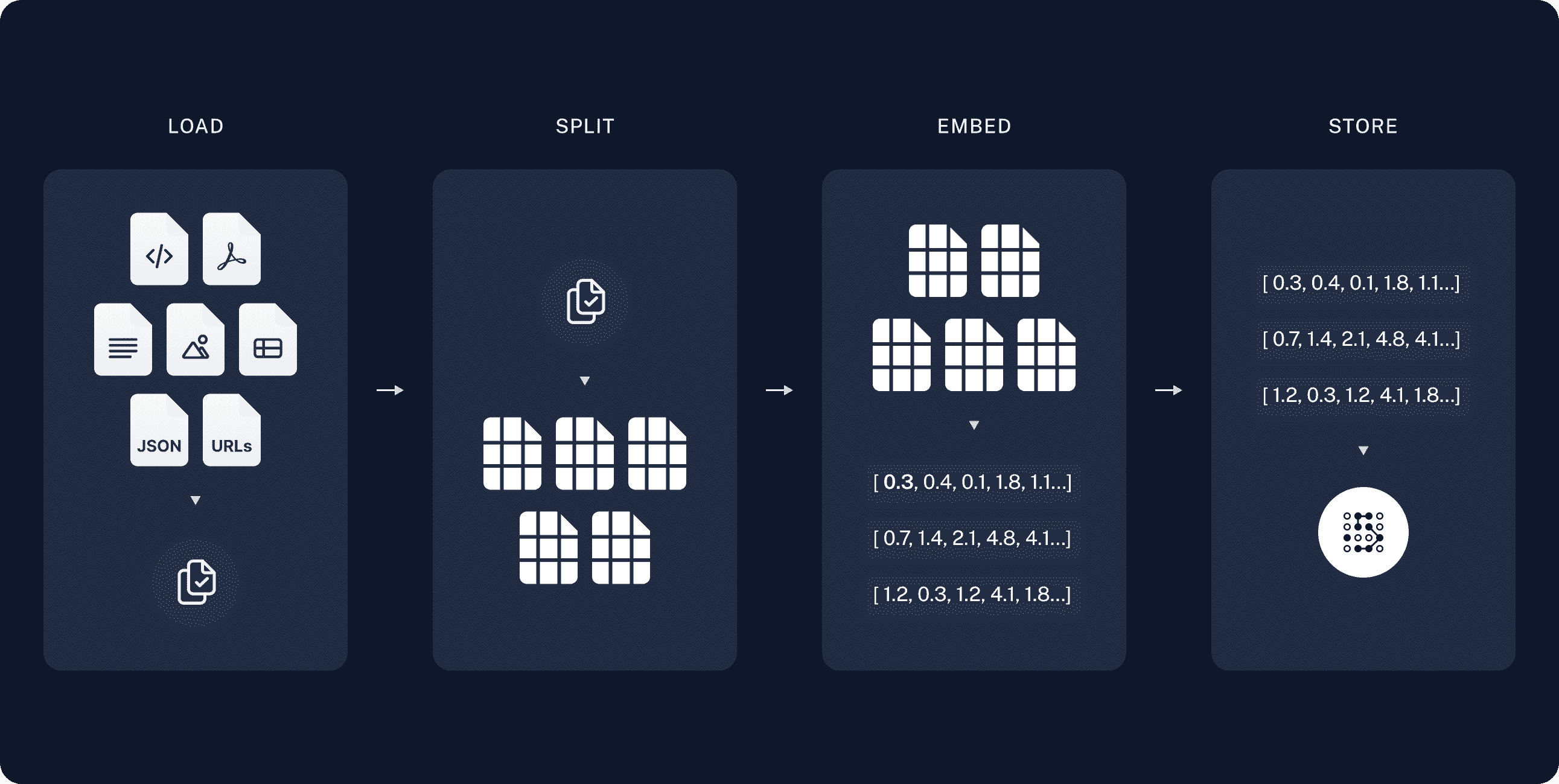

# Indexing

Load: first we need to load our data. This is done with DocumentLoaders.
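To make the Load step concrete, here is a minimal stdlib-only sketch that reads plain-text files into simple document records. `Doc` and `load_text_files` are hypothetical names used for illustration. The `page_content`/`metadata` fields mirror the shape of LangChain `Document` objects, but this is not the DocumentLoaders API.

```python
from dataclasses import dataclass, field
from pathlib import Path


@dataclass
class Doc:
    """Hypothetical stand-in for a loaded document: raw text plus metadata."""
    page_content: str
    metadata: dict = field(default_factory=dict)


def load_text_files(folder: str, pattern: str = "*.txt") -> list[Doc]:
    """Read every file in `folder` matching `pattern` into a Doc, sorted by path.

    The file path is kept in metadata so a later answer can cite its source.
    """
    docs = []
    for path in sorted(Path(folder).glob(pattern)):
        text = path.read_text(encoding="utf-8")
        docs.append(Doc(page_content=text, metadata={"source": str(path)}))
    return docs
```

Keeping the source in metadata costs nothing at load time and lets each chunk derived from the document carry its provenance.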

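Split: text splitters break large Documents into smaller chunks. This helps both for indexing the data and for passing it to a model, because large chunks are harder to search over and won't fit in the model's limited context window.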
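Here is a minimal sketch of the Split step. It assumes a plain fixed-size character window with overlap. `split_text`, `chunk_size` and `chunk_overlap` are illustrative names. LangChain's own splitters, such as `RecursiveCharacterTextSplitter`, go further and try to cut at natural boundaries like paragraph and line breaks.

```python
def split_text(text: str, chunk_size: int = 200, chunk_overlap: int = 50) -> list[str]:
    """Split `text` into windows of at most `chunk_size` characters.

    Consecutive chunks share `chunk_overlap` characters, so text cut at a
    boundary keeps some of its surrounding context in the neighbouring chunk.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be in [0, chunk_size)")
    step = chunk_size - chunk_overlap  # how far each window advances
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):  # last window reached the end
            break
    return chunks
```

For example, with `chunk_size=4, chunk_overlap=1`, the string `"abcdefghij"` splits into `["abcd", "defg", "ghij"]`.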

Store: we need somewhere to store and index our splits so that they can be searched later. This is usually done with a VectorStore and an Embeddings model.
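The Store step needs two things: a way to turn text into vectors, and a place to keep those vectors. The sketch below swaps in two toys. A hashed bag-of-words embedding stands in for a real Embeddings model (such as the SentenceTransformer model used later), and a plain in-memory list stands in for a VectorStore. `toy_embed` and `ToyVectorStore` are hypothetical names, and these vectors carry no real semantic meaning.

```python
import math
import re
import zlib


def toy_embed(text: str, dim: int = 64) -> list[float]:
    """Map text to a fixed-size, L2-normalised vector (a hashed bag of words).

    Toy stand-in for an Embeddings model: texts that share words get similar
    vectors, but no real meaning is captured.
    """
    vec = [0.0] * dim
    for token in re.findall(r"\w+", text.lower()):
        # crc32 is stable across runs, unlike the salted built-in hash().
        vec[zlib.crc32(token.encode("utf-8")) % dim] += 1.0
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm else vec


class ToyVectorStore:
    """Keeps (text, vector) pairs in memory; filled once, offline, at indexing time."""

    def __init__(self, embed=toy_embed):
        self.embed = embed
        self.texts: list[str] = []
        self.vectors: list[list[float]] = []

    def add_texts(self, texts: list[str]) -> None:
        """Embed each chunk and store it alongside its vector."""
        for text in texts:
            self.texts.append(text)
            self.vectors.append(self.embed(text))
```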

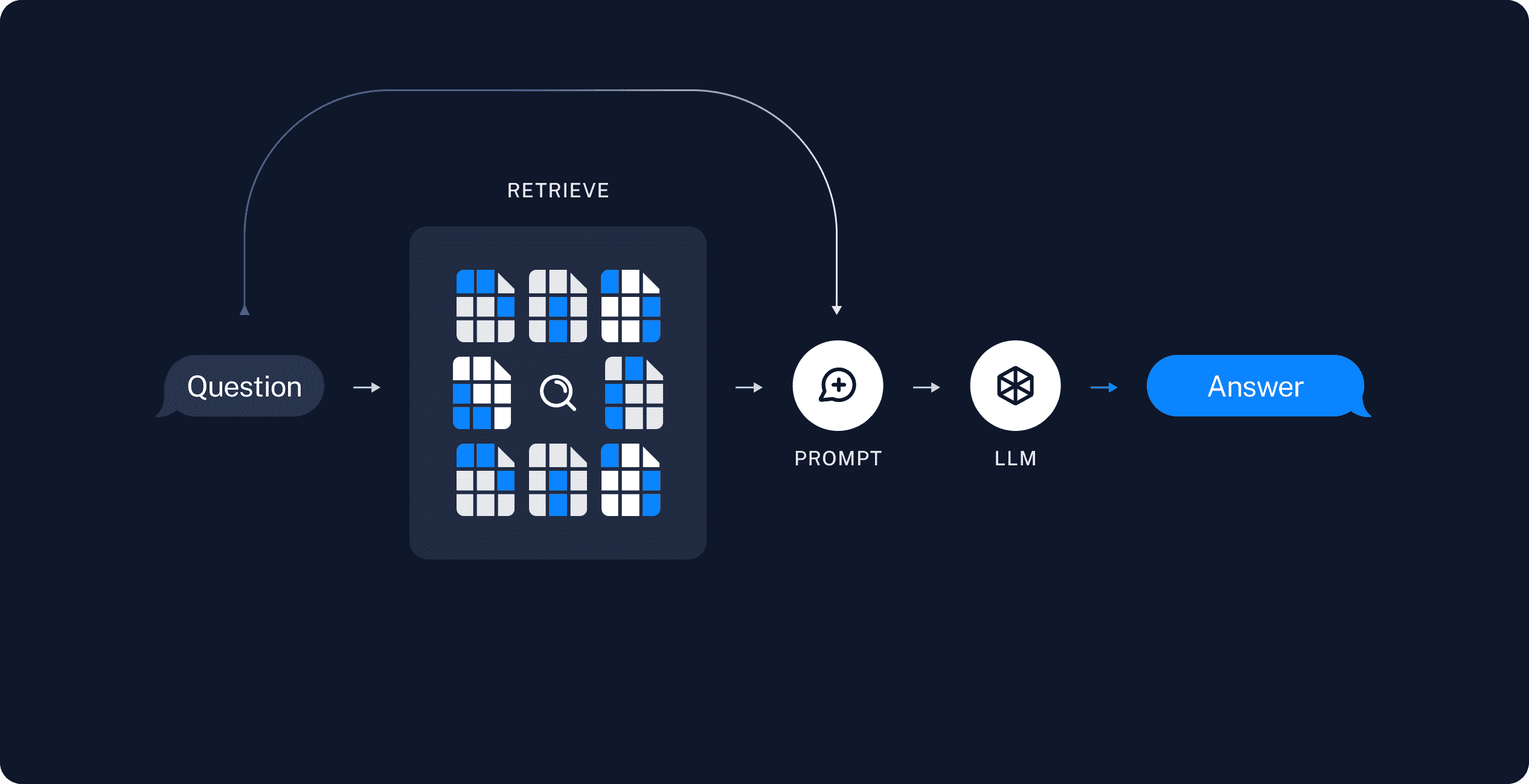

# Retrieval and generation

Retrieve: given a user input, a Retriever fetches the relevant splits from storage.
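With a vector store, retrieval comes down to a similarity search. You embed the query the same way as the stored chunks, then return the closest matches. Here is a minimal sketch using cosine similarity. `retrieve`, its `k` parameter and the pluggable `embed` callable are assumptions made for illustration; they are not the Retriever interface.

```python
import math
from typing import Callable, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def retrieve(query: str,
             texts: Sequence[str],
             vectors: Sequence[Sequence[float]],
             embed: Callable[[str], Sequence[float]],
             k: int = 4) -> list[str]:
    """Return the k stored texts whose vectors are most similar to the query.

    Ties keep their original storage order because Python's sort is stable.
    """
    q = embed(query)
    ranked = sorted(zip(texts, vectors), key=lambda tv: cosine(q, tv[1]), reverse=True)
    return [text for text, _ in ranked[:k]]
```

Because `embed` is passed in, the function works with any embedding function that returns fixed-length vectors, as long as the query and the stored chunks are embedded with the same one.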

Generate: a ChatModel / LLM produces an answer from a prompt that contains both the question and the retrieved data.
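The Generate step is mostly string assembly. Join the retrieved chunks into a context block, place it in a prompt next to the question, and send the result to the model. In this sketch, the `PROMPT` wording, `format_docs` and `generate` are hypothetical. `llm` is any callable that maps a prompt string to an answer string, standing in for a ChatModel / LLM.

```python
from typing import Callable, Sequence

# Hypothetical prompt wording, for illustration only.
PROMPT = (
    "Answer the question using only the context below.\n"
    "If the context is not enough, say you don't know.\n\n"
    "Context:\n{context}\n\n"
    "Question: {question}\n"
    "Answer:"
)


def format_docs(chunks: Sequence[str]) -> str:
    """Join retrieved chunks into one context block, separated by blank lines."""
    return "\n\n".join(chunks)


def generate(question: str, chunks: Sequence[str], llm: Callable[[str], str]) -> str:
    """Fill the prompt with the context and the question, then hand it to the model."""
    prompt = PROMPT.format(context=format_docs(chunks), question=question)
    return llm(prompt)
```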

# Hands-on code

# Initialize the environment

```python
import os
```

# Initialize the embeddings

```python
from langchain.embeddings import SentenceTransformerEmbeddings
```

# Prepare the required dependencies

```python
# BeautifulSoup (commonly abbreviated as BS4) parses HTML and XML files: it extracts data
# from HTML and XML documents and provides tools for navigating the parse tree.
```
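BeautifulSoup is a third-party package. To show what "extracting the text from HTML" means before chunking, here is a stdlib substitute built on `html.parser.HTMLParser`. It is a swapped-in technique, far less capable than BS4, and `TextExtractor` and `html_to_text` are hypothetical names.

```python
from html.parser import HTMLParser


class TextExtractor(HTMLParser):
    """Collects visible text, skipping the contents of <script> and <style>."""

    SKIP = {"script", "style"}

    def __init__(self):
        super().__init__()
        self.parts: list[str] = []
        self._skip_depth = 0  # > 0 while inside a skipped element

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth and data.strip():
            self.parts.append(data.strip())


def html_to_text(html: str) -> str:
    """Return the visible text of `html`, with text fragments joined by spaces."""
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(parser.parts)
```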

# Core code

```python
# Load, chunk and index the contents of the blog.
```

# Calling invoke

```python
rag_chain.invoke("What is Task Decomposition?")
```

# Invocation result

```
"Task Decomposition is a process that breaks down complex tasks into smaller, simpler steps. It utilizes techniques such as Chain of Thought, which instructs the model to think step by step, transforming difficult tasks into manageable ones and providing insight into the model's thought process. This can be achieved through simple prompting, task-specific instructions, or human inputs, and can be extended by exploring multiple reasoning possibilities using Tree of Thoughts."
```
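The code above does not include the definition of `rag_chain`. LangChain chains are usually composed with the `|` operator and run with `.invoke()`. As a rough mental model only, the toy `Step` class below shows how piping steps together turns one `invoke` call into retrieve, prompt and generate stages. This is a hypothetical sketch, not LangChain's Runnable implementation, and the lambdas stand in for a real retriever, prompt and model.

```python
from typing import Any, Callable


class Step:
    """Toy composable step: `a | b` runs a, then feeds its output to b."""

    def __init__(self, fn: Callable[[Any], Any]):
        self.fn = fn

    def __or__(self, other: "Step") -> "Step":
        # Compose: the new step runs self first, then other on the result.
        return Step(lambda x: other.invoke(self.invoke(x)))

    def invoke(self, value: Any) -> Any:
        return self.fn(value)


# Hypothetical stand-ins for a retriever, a prompt template and a model.
fake_retriever = Step(lambda q: {"question": q, "context": "ctx for " + q})
fake_prompt = Step(lambda d: f"Context: {d['context']}\nQ: {d['question']}")
fake_model = Step(lambda p: p.upper())

chain = fake_retriever | fake_prompt | fake_model
print(chain.invoke("hi"))  # prints "CONTEXT: CTX FOR HI" then "Q: HI" on the next line
```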

# Streaming output

```python
for chunk in rag_chain.stream("What is Task Decomposition?"):
    print(chunk, end="", flush=True)
```
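Streaming returns the answer incrementally as an iterator of text chunks, rather than as a single string. The generator below is a hypothetical stand-in (`fake_stream` is not a LangChain function) that splits a fixed answer into pieces. It shows the consumer side: print each chunk as it arrives, and note that the chunks from one call join into that call's complete answer.

```python
import time
from typing import Iterator


def fake_stream(answer: str, chunk_size: int = 8, delay: float = 0.0) -> Iterator[str]:
    """Yield `answer` in pieces of `chunk_size` characters, imitating model output.

    `delay` optionally sleeps between pieces to simulate generation latency.
    """
    for start in range(0, len(answer), chunk_size):
        if delay:
            time.sleep(delay)
        yield answer[start:start + chunk_size]


if __name__ == "__main__":
    for chunk in fake_stream("Task Decomposition breaks big tasks into small steps."):
        print(chunk, end="", flush=True)  # no newline: pieces appear as one line
    print()
```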

# Custom prompt

```python
from langchain_core.prompts import PromptTemplate
```

# Output

```
'Task decomposition involves breaking down complex tasks into smaller, more manageable subgoals. It helps in making it easier to understand, plan, and execute the overall task. This approach is useful in various fields, including project management, programming, and artificial intelligence.\n\nChallenges in long-term planning and task decomposition include managing dependencies between subgoals, coordinating resources, and adapting to changing circumstances. Addressing these challenges can lead to more efficient and effective task completion.\n\nThanks for asking!'
```
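The sample output ends with "Thanks for asking!", which suggests the custom prompt told the model to sign off that way; the prompt itself is not shown. `PromptTemplate.from_template` builds a template from an f-string-style string and infers its input variables from the `{...}` placeholders. The sketch below reproduces only that idea, using the standard library's `string.Formatter`. `TEMPLATE`, `input_variables` and `render` are hypothetical, and the template wording is an assumption, not the author's prompt.

```python
from string import Formatter

# Hypothetical template; the sign-off instruction is an assumption inferred
# from how the sample output ends.
TEMPLATE = """Use the following pieces of context to answer the question at the end.
If you don't know the answer, say that you don't know; don't make one up.
Always end the answer with "Thanks for asking!".

{context}

Question: {question}

Helpful Answer:"""


def input_variables(template: str) -> list[str]:
    """List the {placeholder} names in an f-string-style template, in order, without repeats."""
    names: list[str] = []
    for _literal, field_name, _spec, _conv in Formatter().parse(template):
        if field_name and field_name not in names:
            names.append(field_name)
    return names


def render(template: str, **values: str) -> str:
    """Fill the template, raising KeyError if any placeholder has no value."""
    missing = set(input_variables(template)) - set(values)
    if missing:
        raise KeyError(f"missing prompt variables: {sorted(missing)}")
    return template.format(**values)
```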
